Introduction

Have you ever wondered if there is a way for you to access your web surfing history information directly? In other words, not just viewing it through your browser's built-in History menu. Maybe you want to calculate some surfing statistics. Perhaps you want to determine which websites you spent the most time on. Or how much time you spent on each?

I did some digging and came up with this intro-level guide. I only touch on the basics, but this should be enough to get anyone started.

I use Brave browser, powered by the same engine as Google Chrome. So the information presented here will also apply to the Chrome browser and probably to any Blink-based browser. The location of the history database may differ in some cases.

The location of the history information

The first question is: Where does Brave Browser store the history information?

Stepping back a bit, it is essential to know that Brave Browser is based on the Blink rendering engine. Which, in turn, is a fork of the WebCore component of Webkit.

And it turns out that all webkit-derived browsers store their history information inside a SQLite database, named History. The exact location of this database file depends on the relevant operating system and which version of Brave is installed. Below are some example locations of some of my lab environments.

Some examples of the database's possible location are shown below:

In Linux OS = /home/user/.config/BraveSoftware/History

Installed as a Snap in Ubuntu Linux 20.06 =/home/user/snap/brave/228/.config/BraveSoftware/Brave-Browser/Default/History

In Windows 10 OS = C:/Users/name/AppData/Local/BraveSoftware/Brave-Browser/User Data/Default/History

Exploring the History database with DB Browser for SQLite

Important: If you are new to this, DO NOT work with the live database. I recommend you make a copy of the database file, and run your code against the copy.

I used DB Browser for SQLite to explore the History database initially. It provides a very user-friendly GUI interface, making exploring a database straightforward, especially if you are unfamiliar with a 3rd party database.

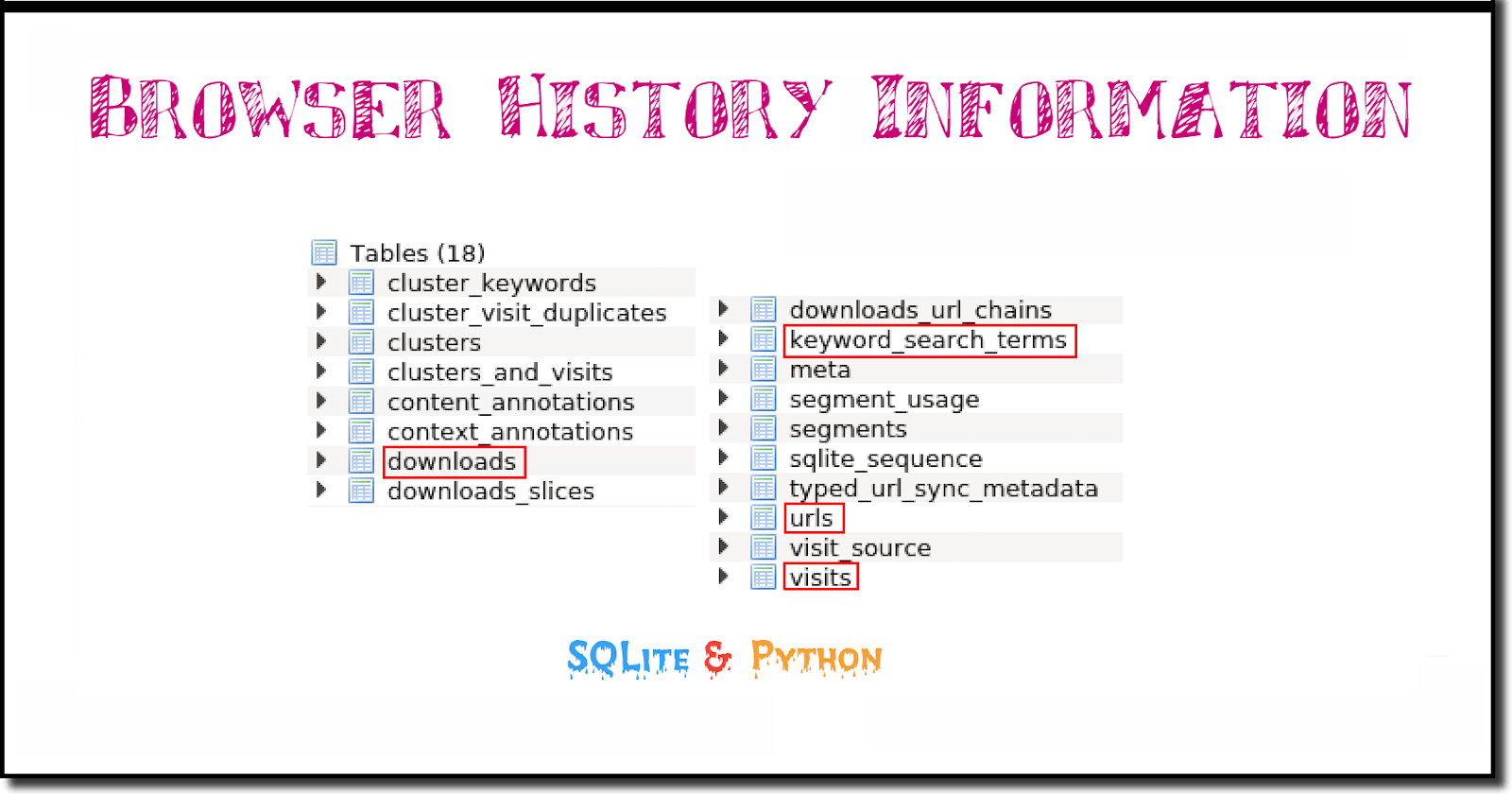

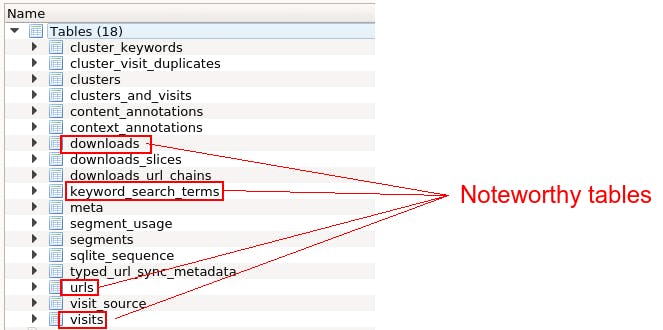

Below is a list of all the tables in the History database.

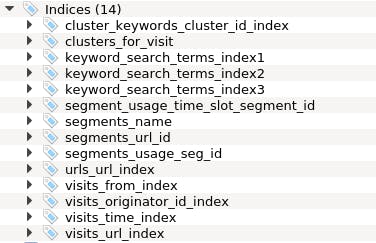

Below is a list of all the table indices in the History database.

Interesting looking tables

As you can see, the History database contains several tables. But I will highlight only some interesting-looking ones: downloads, keyword_search_terms, URLs and visits.

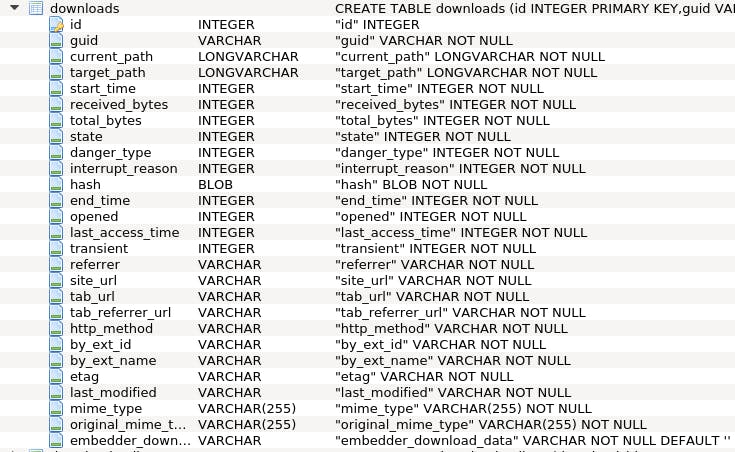

Below is the layout of the tables, including their field definitions.

The downloads table layout:

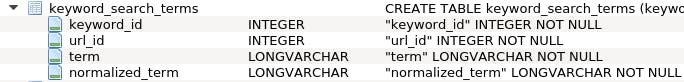

The keywords_search_terms table layout:

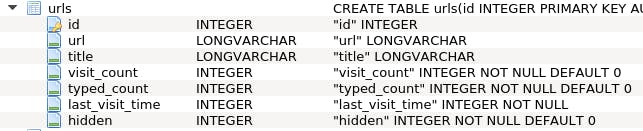

The urls table layout:

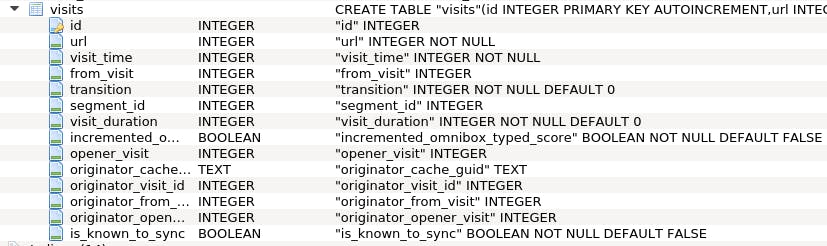

The visits table layout:

Mine some data with Python 3 & SQL

Now that we have identified some tables and fields, let's run some queries using Python.

Launch your Python IDE of choice, and connect to the database. I use the Python standard IDLE in the following examples.

I use a function to beautify the database cursor output. This function is stored inside a module named pretty_printer.py, which I import before connecting to the database. Below is a copy of this function's code.

Pretty printer function

Below is the function I use to beautify the database cursor output.

(I found the function on the web some time ago but lost the link to it. So I am unable to credit the source).

# A function to beautify the database cursor output

def pretty_print_cursor(cursor):

columns = [desc[0] for desc in cursor.description]

rows = cursor.fetchall()

column_widths = [max(len(str(row[i])) for row in rows + [columns]) for i in range(len(columns))]

format_string = ' | '.join('{{:<{}}}'.format(width) for width in column_widths)

print(format_string.format(*columns))

print('-' * (sum(column_widths) + (3 * len(columns) - 1)))

for row in rows:

print(format_string.format(*row))

First, import the libraries used in the following examples:

>>> import sqlite3

>>> import pretty_printer

Connect to a copy of the History database (I placed mine in the /tmp folder):

>>> con = sqlite3.connect('/tmp/History')

>>> cur = con.cursor()

Query the downloads table

SQL:

cur.execute('select target_path, datetime(start_time/1000000-11644473600, "unixepoch") as Start_Time, received_bytes from downloads limit 5')

Beautify the output:

>>> print(pretty_printer.pretty_print_cursor(cur))

Output:

target_path | Start_Time | received_bytes

---------------------------------------------------------------------------------------------------------------

/home/cooltool/Downloads/putty-64bit-0.78-installer.msi | 2023-05-03 12:08:14 | 3705856

/home/cooltool/Downloads/putty-0.78.tar.gz | 2023-05-03 12:08:30 | 2811628

/home/cooltool/Downloads/E93615.pdf | 2023-05-03 12:14:42 | 185060

/home/cooltool/Downloads/Oracle Open Source Support Policies.pdf | 2023-05-03 12:15:34 | 736009

/home/cooltool/Downloads/DB_Browser_for_SQLite-v3.12.2-x86_64.AppImage | 2023-05-05 12:18:01 | 38727720

Query the keyword_search_term table

SQL:

>>> cur.execute('select keyword_id, url_id, term from keyword_search_terms where term like "oracle%"')

Beautify the output:

>>> print(pretty_printer.pretty_print_cursor(cur))

Output:

keyword_id | url_id | term

-------------------------------------------------------------

6 | 14 | oracle support login

6 | 18 | oracle linux 9 end of life

6 | 20 | oracle solaris 11.4 end of life

6 | 22 | oracle sparc t4-1 server end of life

3 | 61 | Oracle database latest version

3 | 71 | oracle vm server for x86 end of life

3 | 81 | oracle solaris 11.4

3 | 82 | oracle sparc t4-1

3 | 83 | oracle sparc t4-1 datasheet

3 | 86 | oracle sun server x4-2 datasheet

3 | 88 | oracle sun server x4-2 ilom datasheet

Query the urls table

1). Retrieve a few URL titles and the total number of times the sites were visited.

SQL:

>>> cur.execute('SELECT title, visit_count FROM urls limit 5')

Beautify the output:

>>> print(pretty_printer.pretty_print_cursor(cur))

Output:

title | visit_count

-----------------------------------------------------

Google | 4

bitcoin price usd - Google Search | 2

Bing | 2

Bing | 2

the weather in new york city - Search | 1

2). When did you visit a specific site?

Blink-based browsers implement the WebKit timestamp format. Refer to the below example and the last_visit_time field values.

Note: In section 3, I show you how to convert this timestamp into a human-readable format using SQL.

SQL:

>>> cur.execute('select title, url, last_visit_time from urls where title = "Bing" limit 5')

Beautify the output:

>>> print(pretty_printer.pretty_print_cursor(cur))

Output:

title | url | last_visit_time

----------------------------------------------------------------------

Bing | https://bing.com/ | 13327589225022830

Bing | https://www.bing.com/?toWww=1&redig=EF | 13327589225022830

3). Converting WebKit format timestamps

In the previous output, the last_visit_time field contains a timestamp that is in the WebKit timestamp format. This is a 64-bit value expressing microseconds since 1 Jan 1601 00:00 UTC. One microsecond is one-millionth of a second.

In the following example, I show you how to convert the WebKit timestamps to a human-readable format.

SQL:

>>> cur.execute('select datetime(last_visit_time/1000000-11644473600, "unixepoch") as Human_Readable_Time from urls limit 5')

Beautify the output:

>>> print(pretty_printer.pretty_print_cursor(cur))

Output:

Human_Readable_Time

---------------------

2023-05-15 07:03:00

2023-05-05 12:16:30

2023-05-03 12:07:05

2023-05-03 12:07:05

2023-05-03 12:06:05

To learn more about Epoch & Unix Timestamps, and Conversion Toolsc, visit this site: https://www.epochconverter.com/

Conclusion

I touched on the basics only. However, this information should be enough to get anyone started with the history data, experiment, and write a custom script or two.